Text: Michael Siedenhans

Markus Schwarz’s everyday routine focuses almost entirely on one goal – reliable data centre processes. “Data centre services must be available 24/7. We can’t afford a system failure,” says the Data Centre Manager from Arvato Systems in Gütersloh. He is non-committal about what the future holds. “Nobody can predict exactly how data centre requirements will change in the next three, five or ten years,” emphasises the IT expert. Many data centre operators and IT managers are in the same situation as Schwarz. They need to adapt to the onward march of digitalisation, but how can they plan with any certainty for an unknown future? Is it possible to design a data centre that is both geared closely to current requirements and, at the same time, future-proof?

“Yes!” is the reply from physicist Michael Nicolai, Head of Sales IT Germany at Rittal. Growing power and cooling requirements are one key aspect in this regard. For example, the Borderstep Institute for Innovation and Sustainability predicts that the total power consumption in Europe’s data centres is going to climb from its current level of 80 terawatt-hours to 90 terawatt-hours by 2025. “The cooling of servers and IT racks alone accounts for up to 40 per cent of a data centre’s total energy consumption,” reveals Nicolai. This calls for scalable and energy-efficient cooling solutions.
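To put these figures in perspective, the following is a rough back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the "up to 40 per cent" cooling share can be applied as an upper bound to the Europe-wide total, which the forecast itself does not state. The function names are our own.

```python
def growth_percent(current: float, forecast: float) -> float:
    """Relative growth from the current value to the forecast value, in per cent."""
    if current <= 0:
        raise ValueError("current must be positive")
    return (forecast - current) / current * 100.0


def cooling_energy_twh(total_twh: float, cooling_fraction: float = 0.40) -> float:
    """Upper-bound estimate of the energy spent on cooling, in terawatt-hours.

    cooling_fraction defaults to the 'up to 40 per cent' quoted in the article;
    applying it to a continent-wide total is an illustrative assumption.
    """
    if not 0.0 <= cooling_fraction <= 1.0:
        raise ValueError("cooling_fraction must be between 0 and 1")
    return total_twh * cooling_fraction


current_twh = 80.0   # current consumption quoted in the article
forecast_twh = 90.0  # forecast for 2025 quoted in the article

print(f"Forecast growth: {growth_percent(current_twh, forecast_twh):.1f} %")
print(f"Cooling today (upper bound): {cooling_energy_twh(current_twh):.0f} TWh")
print(f"Cooling in 2025 (upper bound): {cooling_energy_twh(forecast_twh):.0f} TWh")
```

Under these assumptions, every percentage point shaved off the cooling share is worth close to a terawatt-hour per year, which is why the efficiency of the cooling solution matters so much.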

IT EXPERTISE ENCAPSULATED

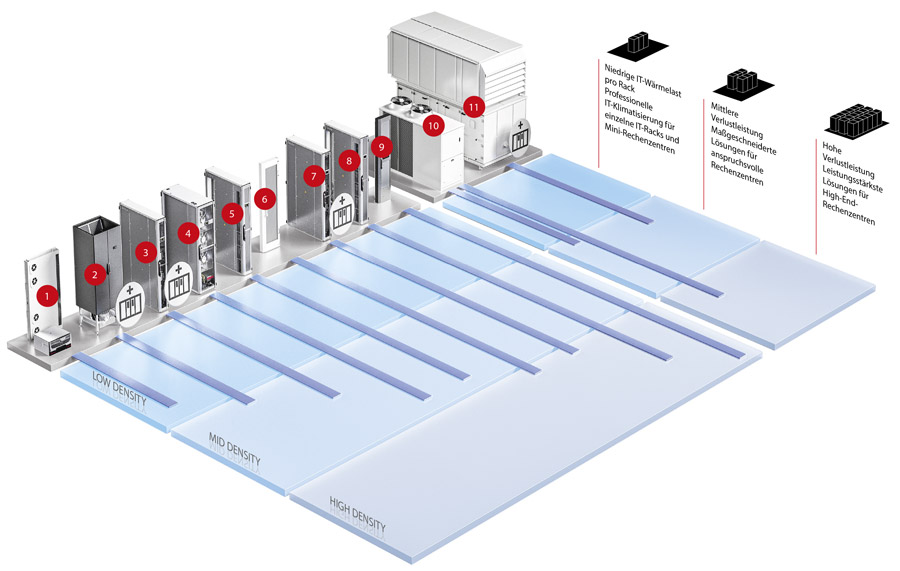

The experts at Rittal are in dialogue with data centre operators across the globe and therefore understand these challenges. Their response is the open IT infrastructure platform RiMatrix Next Generation – a modular solution that enables data centre operators to plan for the future right now. “We’ve put all our IT expertise into RiMatrix Next Generation. We’ve expanded our portfolio, which means we’re making it possible to create extremely future-proof and flexible solutions,” underlines Nicolai. The IT platform works like an open, modular system – a matrix – with individual components that fit together like Lego and can be extended and scaled as required.

The basic modules are IT racks, to which further components for climate control, power supply and backup, IT monitoring and IT security can be added. As a result, all kinds of IT infrastructure solutions can be developed in a range of sizes – from standalone racks, IT containers and edge data centres to colocation, cloud and huge hyperscale data centres. The unique feature here is that new components are always compatible with existing and older ones. This means every aspect of an IT platform can be modified, including size, performance, security and fail-safe operation. Regardless of their plans for the future, data centre operators worldwide always obtain the exact solution they need for their regional requirements. Service providers and partners certified by Rittal deal with local specifications on site, which has one major advantage: “This approach avoids technical overkill, because data centre operators only apply technology that is of use to them,” explains Nicolai.

NEEDS-BASED COOLING

Returning to the subject of energy-efficient cooling, Nicolai is an expert when it comes to optimum climate control in data centres. He is regarded as the father of the LCP (Liquid Cooling Package) at Rittal. “Around the world, we always look for the best local solutions to generate needs-based cooling power. This might mean something quite different in Sweden than it does in Germany, and also in a high-performance data centre as opposed to a centre with a low or medium output,” continues Nicolai.

FREE ROOM COOLING

Conventional room cooling based on a high-precision climate control system and an air circulation system is widely used. Cooling is taken care of by a CRAC (Computer Room Air Conditioning) unit that operates in the same way as a conventional air-conditioning system. Cold air is blown into the data centre and routed to the racks via a raised floor. Using CRAC systems for cooling is a suitable approach for data centres with a low or medium output per IT rack, while air handling units (AHUs) and precision air handling units (PAHUs) are appropriate for large hyperscale data centres. These units dissipate heat directly outdoors, which means they deliver a high cooling output without taking up floor space.

Furthermore, if the outside air at the location is cooler than the air being expelled from the IT systems, no active cooling system is required (the principle of indirect free cooling). Otherwise, external air/water heat exchangers (outdoor chillers) are used to cool the warm water from the data centre to 20 degrees Celsius or below. “Rittal has optimised this traditional procedure for climate control in data centres with outputs extending into the megawatt range,” reveals Nicolai. Ideally, when using this cooling method, power is needed merely to drive the fans of the free cooler and potentially the cold water pumps. “The efficiency of this solution depends to a great extent on the local climatic conditions. Naturally, a data centre can operate far more cost-effectively in the cold climes typical of Scandinavia than in the warm conditions that prevail in southern Europe,” continues Nicolai.
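The free cooling rule described above can be sketched in a few lines of Python. This is a deliberately simplified illustration rather than Rittal's control logic: it applies only the comparison between outside air and exhaust air named in the text, and both the function names and the sample temperatures are made up for the example.

```python
from typing import Iterable


def free_cooling_sufficient(outside_temp_c: float, exhaust_temp_c: float) -> bool:
    """True if the outside air is cooler than the air expelled by the IT systems."""
    return outside_temp_c < exhaust_temp_c


def free_cooling_fraction(outside_temps_c: Iterable[float], exhaust_temp_c: float) -> float:
    """Share of temperature samples during which no active cooling would be needed."""
    temps = list(outside_temps_c)
    if not temps:
        raise ValueError("at least one temperature sample is required")
    free_samples = sum(free_cooling_sufficient(t, exhaust_temp_c) for t in temps)
    return free_samples / len(temps)


# Hypothetical outside temperatures in degrees Celsius, not measured data.
sample_temps_c = [-5.0, 8.0, 19.0, 27.0, 36.0]
exhaust_temp_c = 35.0  # assumed exhaust air temperature

share = free_cooling_fraction(sample_temps_c, exhaust_temp_c)
print(f"Free cooling is sufficient for {share:.0%} of the samples")
```

Feeding in a location's real hourly temperatures would give a first impression of why the same data centre runs more cheaply in Scandinavia than in southern Europe.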

INDIRECT ROOM COOLING

Indirect free room cooling is an alternative solution that is being used at the 1-megawatt data centre of media company Deutsche Welle. The system only generates cooling power as and when required, which achieves power savings of up to 60 per cent by preventing oversupply. Rittal has been able to include this module in its portfolio since it started working with Stulz (see the News section on page 16). This Hamburg-based company specialises in climate control in data centres. The new IT infrastructure platform therefore includes high-performance cooling components such as free cooling systems, side coolers and indoor chillers. “This collaboration adds the finishing touch to our data centre cooling portfolio, in the shape of a standard closed-circuit air conditioning unit in all design variants, and gives us access to the company’s pipework service for larger projects,” says Nicolai.

RACK-BASED COOLING

It is widely assumed that data volumes and thus power densities in data centres will increase. This is already the case in high-performance data centres, where servers are concentrated in the smallest of spaces. As a result, up to 30 kilowatts of power is drawn in an area of just one square metre. Conventional raised floor structures are no longer able to dissipate the resulting waste heat quickly enough, which is where the LCP (Liquid Cooling Package) family of rack-based cooling solutions from Rittal comes in. “It makes the most sense both technically and in terms of cost-efficiency. These rack-based solutions are the only option for the most demanding performance requirements,” emphasises Nicolai before going on to explain the principle of the concept he developed. “The hot air in the room isn’t fed into the normal air circuit. Instead, it’s extracted by the LCP directly at the rear of the servers, cooled using the heat exchanger and reintroduced at the front. LCPs react at lightning speed, which is ideal for a high-performance data centre,” he says.
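A short calculation shows why such power densities push room-level air cooling to its limits. The sketch below uses the standard heat balance, flow = power / (density × specific heat × temperature rise). The 15 kelvin temperature rise and the material constants are textbook-style assumptions chosen for illustration, not Rittal specifications.

```python
# Approximate material properties at room temperature (illustrative assumptions).
AIR_DENSITY = 1.2      # kg/m^3
AIR_CP = 1005.0        # J/(kg*K)
WATER_DENSITY = 998.0  # kg/m^3
WATER_CP = 4186.0      # J/(kg*K)


def required_flow_m3_per_s(power_w: float, density: float,
                           specific_heat: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry away power_w at a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return power_w / (density * specific_heat * delta_t_k)


heat_load_w = 30_000.0  # the 30 kilowatts per square metre quoted in the article
delta_t_k = 15.0        # assumed temperature rise of the coolant

air_flow = required_flow_m3_per_s(heat_load_w, AIR_DENSITY, AIR_CP, delta_t_k)
water_flow = required_flow_m3_per_s(heat_load_w, WATER_DENSITY, WATER_CP, delta_t_k)

print(f"Air:   {air_flow:.2f} m^3/s ({air_flow * 3600:.0f} m^3/h)")
print(f"Water: {water_flow * 1000:.2f} litres/s")
print(f"Air needs about {air_flow / water_flow:.0f} times the volume flow of water")
```

With these assumptions, air has to move at well over a cubic metre per second to remove the heat from a single square metre, while water manages the same job with a volume flow that is a few thousand times smaller. That gap is the reason for moving the heat exchanger as close to the servers as possible.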

EFFICIENT CHIP COOLING

Since air is a poor conductor of heat, however, direct CPU cooling will play a key role in the future. This is conventionally implemented as water cooling. One alternative is innovative direct chip cooling with advanced refrigerants. This IT cooling method helps achieve the highest efficiency values. The new water-free, two-phase liquid cooling system from Rittal and ZutaCore is one example. “By changing the state of matter, this solution absorbs more thermal energy from the chips and does so faster. Its exceptionally high-performance cooling takes place precisely where hotspots and performance peaks occur. This enables efficient, fail-safe operation of high-performance data centres and minimises IT failures,” explains Nicolai.

He sums up as follows: “With RiMatrix Next Generation, our portfolio now includes cooling solutions for everything from individual racks and both suite and room climate control through to the Liquid Cooling Package and direct chip cooling for sophisticated high-performance data centres. Using Rittal cooling units for direct rack climate control can result in energy and CO₂ savings of up to 75 per cent.” The energy-efficient, cost-cutting cooling concepts in the RiMatrix Next Generation modular system therefore make it possible for any data centre operator to invest for the future right now, even though what lies ahead is somewhat unpredictable. After all, regardless of data volume and power density, a data centre can only benefit from reliable, fault-free operation if it is cooled effectively.